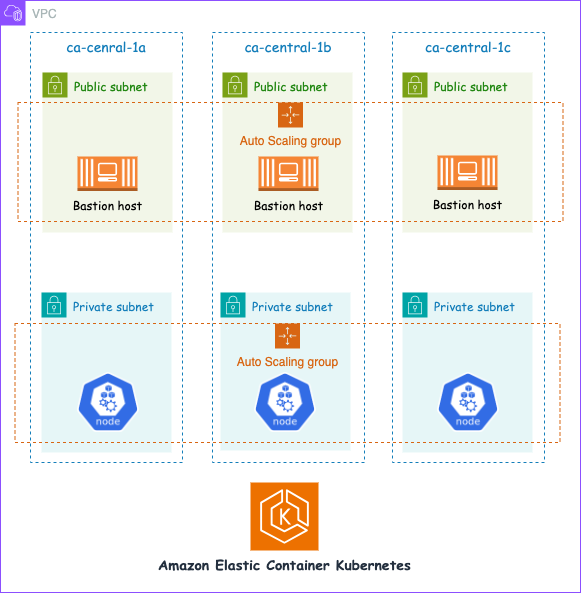

In this exercise, I will deploy an Amazon Elastic Kubernetes Service (EKS) cluster using Terraform and configure kubectl to verify that the cluster is ready to use. Kubernetes is an open-source container orchestration system for deploying and managing containerized applications, and a Kubernetes cluster is a set of nodes that run those applications. With EKS, I can run my Kubernetes applications on Amazon Elastic Compute Cloud (EC2) instances and take advantage of AWS scalability and availability features such as Application Load Balancers (ALB) and Auto Scaling groups (ASG).

Preparing Terraform Configuration Files

This configuration creates a VPC and provisions an EKS cluster inside it, with the following architecture:

A VPC is created with three public subnets and three private subnets across three availability zones:

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "3.14.2"

  name = "terraform-eks-vpc"
  cidr = "10.0.0.0/16"

  # Spread the subnets across the first three availability zones in the region
  azs             = slice(data.aws_availability_zones.available.names, 0, 3)
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets  = ["10.0.4.0/24", "10.0.5.0/24", "10.0.6.0/24"]
}
```

The EKS cluster runs two managed node groups of t2.micro instances. Each group starts with two nodes and can scale between one and three, so the cluster launches with four nodes:

```hcl
eks_managed_node_groups = {
  one = {
    name           = "node-group-1"
    instance_types = ["t2.micro"]
    min_size       = 1
    max_size       = 3
    desired_size   = 2
  }

  two = {
    name           = "node-group-2"
    instance_types = ["t2.micro"]
    min_size       = 1
    max_size       = 3
    desired_size   = 2
  }
}
```

Running the Terraform Code

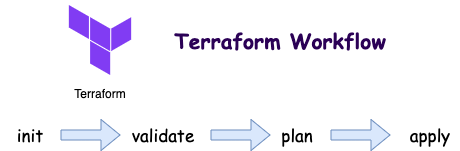

A Terraform workflow consists of four main steps:

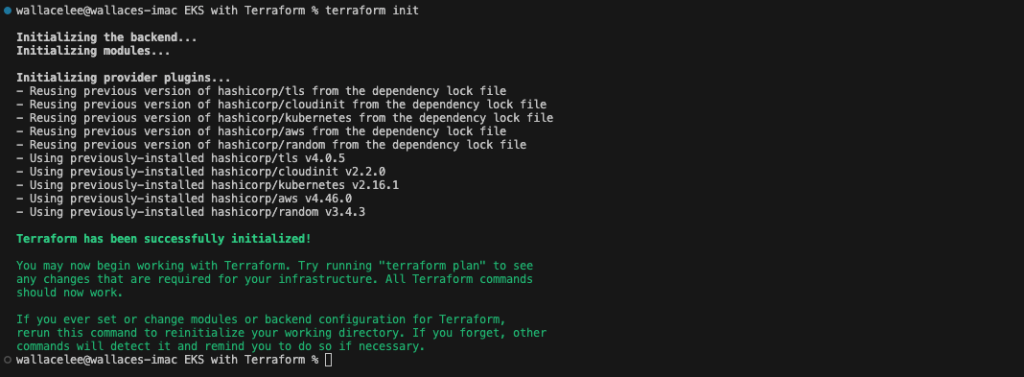

Init – Prepare the working directory and download the modules and providers that my configuration files require. Run:

$ terraform init
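Terraform init installs whatever providers and modules the configuration declares, but the excerpts above show neither those declarations nor the aws_availability_zones data source that the vpc module's azs argument reads from. A minimal sketch of them might look like the following. The region variable is a hypothetical name, the ca-central-1 default is taken from the node hostnames shown later, and pinning the AWS provider to 4.x is an assumption, based on the VPC module being a 3.x release that predates AWS provider 5.x.

```hcl
# Hypothetical versions file: the providers and versions that terraform init installs
terraform {
  required_version = ">= 1.0" # assumption: any recent Terraform 1.x release

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0" # assumption: the 3.x VPC module predates AWS provider 5.x
    }
  }
}

# Hypothetical variable so the region is defined in one place
variable "region" {
  description = "AWS region to deploy the VPC and EKS cluster into"
  type        = string
  default     = "ca-central-1"
}

provider "aws" {
  region = var.region
}

# Lists the availability zones in the region; the vpc module's azs argument
# takes the first three names from this list.
data "aws_availability_zones" "available" {
  state = "available"
}
```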

Validate – Check that the configuration files are syntactically valid and internally consistent:

$ terraform validate
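Validate also rejects arguments that appear where Terraform does not expect them. eks_managed_node_groups, for example, is an input of the community EKS module (terraform-aws-modules/eks/aws), so the node group map shown earlier only validates inside a module block. A minimal sketch of that block follows; the "~> 18.0" version constraint and the cluster name are assumptions, not values from the original configuration.

```hcl
# Hypothetical cluster name; pass the same value to aws eks update-kubeconfig --name
locals {
  cluster_name = "terraform-eks-cluster"
}

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 18.0" # assumption: the v18.x line, which takes vpc_id, subnet_ids and eks_managed_node_groups

  cluster_name = local.cluster_name
  # Assumption: cluster_version is left unset here; pin it to a Kubernetes
  # version that EKS currently supports.

  # Keep the API server reachable from a workstation so kubectl works after apply
  cluster_endpoint_public_access = true

  # Run the cluster in the VPC created by module "vpc", with nodes in its private subnets
  vpc_id     = module.vpc.vpc_id
  subnet_ids = module.vpc.private_subnets

  # The two node groups from the configuration above
  eks_managed_node_groups = {
    one = {
      name           = "node-group-1"
      instance_types = ["t2.micro"]
      min_size       = 1
      max_size       = 3
      desired_size   = 2
    }

    two = {
      name           = "node-group-2"
      instance_types = ["t2.micro"]
      min_size       = 1
      max_size       = 3
      desired_size   = 2
    }
  }
}
```

Because subnet_ids points at the private subnets, the nodes need outbound internet access to register with the cluster and pull images. With the VPC module, that typically means setting enable_nat_gateway = true (single_nat_gateway = true keeps it to one gateway), which the vpc excerpt does not show.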

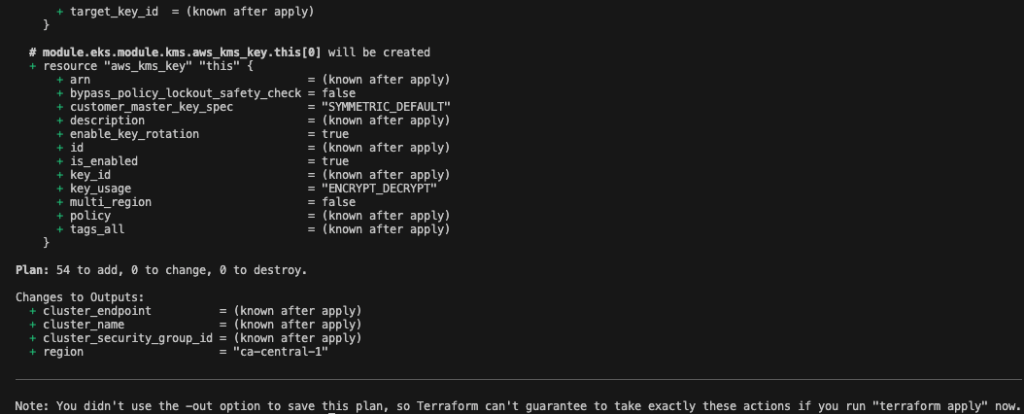

Plan – Preview the changes Terraform will make before applying them:

$ terraform plan

Apply – Deploy the changes by creating or updating the resources in AWS:

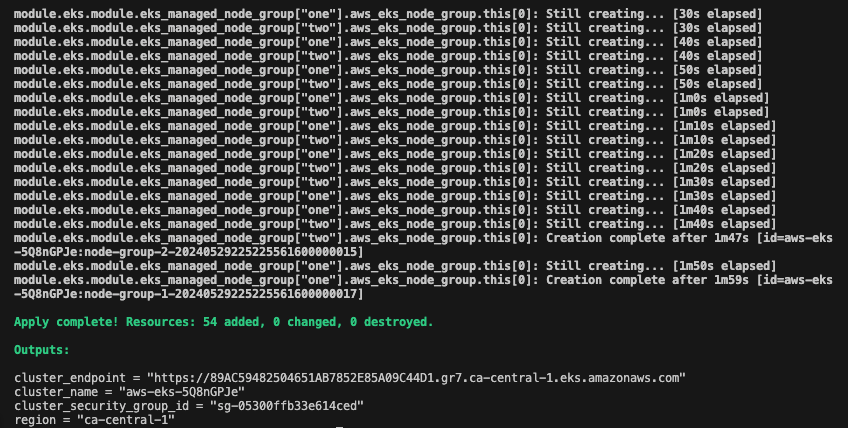

$ terraform apply

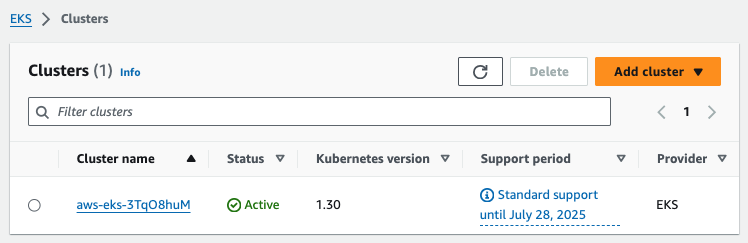

Creating the cluster takes about 10 to 15 minutes. When it completes, I can see my EKS cluster in the AWS console.
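The update-kubeconfig command in the next section needs the cluster's region and name. One way to avoid copying them by hand is to expose them as Terraform outputs. A minimal sketch, assuming the configuration defines a region variable and a cluster_name local (both hypothetical names; adjust them to match your files):

```hcl
# Hypothetical outputs file: values printed after terraform apply
output "region" {
  description = "AWS region the cluster was created in"
  value       = var.region
}

output "cluster_name" {
  description = "Name of the EKS cluster"
  value       = local.cluster_name
}
```

After terraform apply, terraform output -raw region and terraform output -raw cluster_name print the bare values, so they can be passed straight to the AWS CLI:

$ aws eks update-kubeconfig --region $(terraform output -raw region) --name $(terraform output -raw cluster_name)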

Configure kubectl

Kubernetes provides a command-line tool called kubectl for communicating with a Kubernetes cluster’s control plane through the Kubernetes API. To use it, I first need a kubeconfig file that points at the cluster. The AWS CLI can create or update one; replace region_name and cluster_name with the cluster's region and name:

$ aws eks update-kubeconfig --region region_name --name cluster_name

To test the connection to the cluster's control plane:

$ kubectl get svc

```
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   172.20.0.1   <none>        443/TCP   5h39m
```

To view the nodes along with their CPU and memory capacity:

$ kubectl get nodes -o custom-columns=Name:.metadata.name,nCPU:.status.capacity.cpu,Memory:.status.capacity.memory

```
Name                                          nCPU   Memory
ip-10-0-1-150.ca-central-1.compute.internal   1      2031268Ki
ip-10-0-1-189.ca-central-1.compute.internal   1      2031268Ki
ip-10-0-2-241.ca-central-1.compute.internal   1      2031268Ki
ip-10-0-2-172.ca-central-1.compute.internal   1      2031268Ki
```

Destroy the EKS Cluster

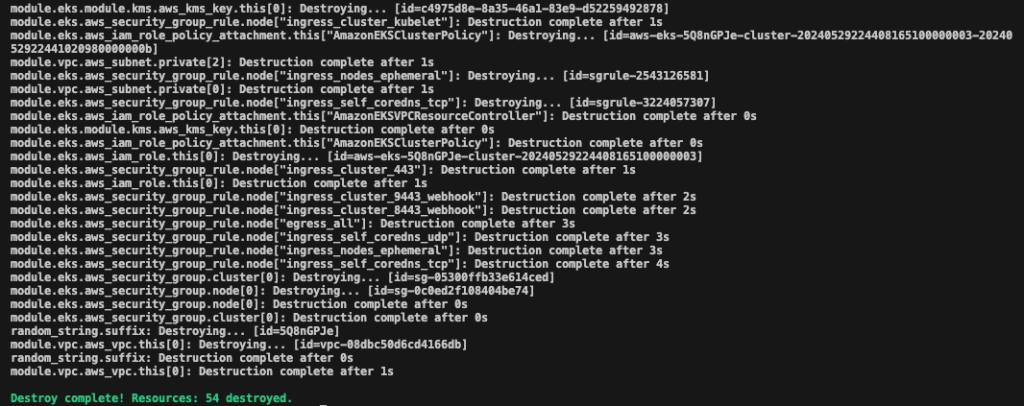

To destroy the whole infrastructure that Terraform has created, simply use the following command:

$ terraform destroy

You can find my configuration files on GitHub.