In my previous blogs, I explored how to enhance observability in AWS environments by ingesting logs and metrics into Dynatrace. Now I will dive deeper into one of Dynatrace’s most revolutionary features: Dynatrace Grail. This cutting-edge observability data lakehouse not only unifies logs, metrics, and traces in a single platform but also powers AI-driven analytics that deliver real-time insights and automated root cause analysis. In this blog, I’ll go beyond basic integrations and focus on the unique value Grail brings to observability, especially compared with AWS CloudWatch and OpenSearch. I’ll explore how the Dynatrace Query Language (DQL), real-time ingestion, and AI-powered automation built on Grail can dramatically improve operational efficiency and reduce the time to resolve incidents.

What is Dynatrace Grail?

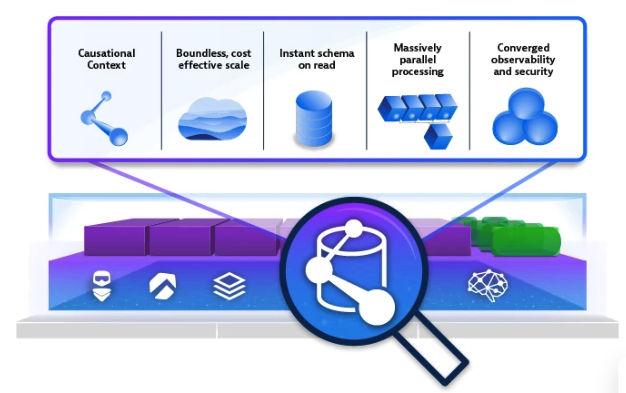

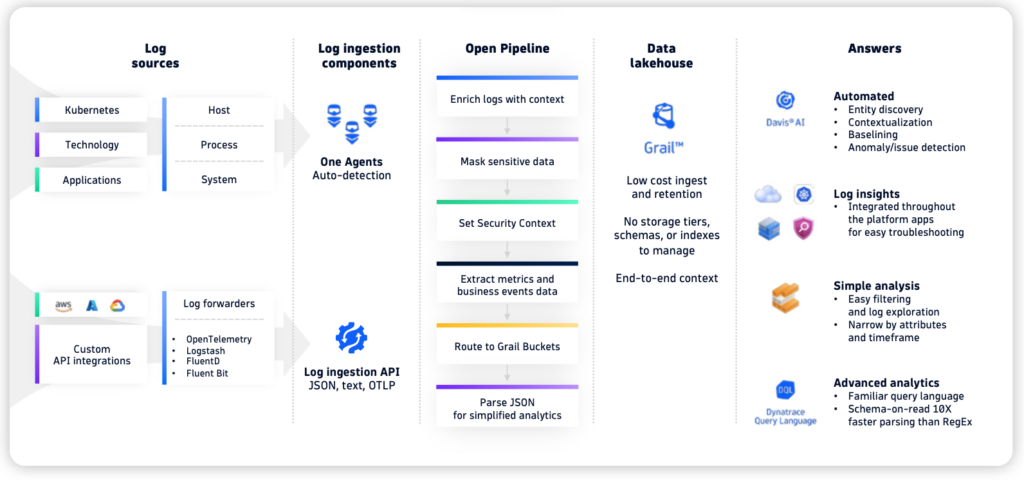

Dynatrace Grail is the backbone of Dynatrace’s observability platform, designed to unify all your observability data—logs, metrics, traces, events, and security information—into a single, queryable data lakehouse. Unlike traditional log management tools that handle only logs, Grail integrates all observability data types into one system. This allows for real-time ingestion, instant querying, and AI-powered root cause analysis, all from a single platform.

Key Features of Dynatrace Grail:

- Real-Time Ingestion: Seamlessly ingests logs, metrics, and traces from multiple sources in real time.

- AI-Powered Insights: Uses Davis AI for automated anomaly detection and root cause analysis.

- Querying and Analysis: Leverages the Dynatrace Query Language (DQL) to query across logs, metrics, and traces.

- Scalable Log Analytics: Designed for dynamic, cloud-native environments, offering real-time scalability without manual reconfiguration.

Let’s break down these features for a deeper understanding of Dynatrace Grail, comparing each one with similar AWS services.

1. Real-Time Ingestion and Unified Observability

AWS CloudWatch:

- CloudWatch ingests logs and metrics from AWS services, applications, and on-prem systems, but it splits metrics and logs into separate services (CloudWatch Logs and CloudWatch Metrics).

- While AWS CloudWatch is powerful for monitoring individual AWS resources, correlating logs and metrics often requires multiple tools and a fair amount of manual effort. For instance, you need to link CloudWatch with X-Ray for tracing, or enable CloudWatch Lambda Insights for serverless monitoring.

Dynatrace Grail:

- Grail ingests logs, metrics, and traces in real time and stores them in a unified data lakehouse.

- With automatic correlation between these data types, Grail eliminates the need for manual integration between different monitoring services. For example, a spike in CPU usage is automatically linked to related logs and traces, giving you an immediate holistic view of the issue.
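Grail can also receive data pushed directly over HTTP, which is useful for sources that have no agent installed. The sketch below builds, but does not send, such a request using only Python's standard library. It assumes the Log Monitoring API v2 ingest endpoint (`POST /api/v2/logs/ingest`) and an access token with the `logs.ingest` scope. The environment URL, token, and log attributes are placeholders.

```python
import json
import urllib.request


def build_log_ingest_request(env_url: str, api_token: str, records: list[dict]) -> urllib.request.Request:
    """Build (but do not send) a POST request to the log ingest endpoint."""
    return urllib.request.Request(
        url=env_url.rstrip("/") + "/api/v2/logs/ingest",
        data=json.dumps(records).encode("utf-8"),  # a JSON array of log records
        method="POST",
        headers={
            "Authorization": f"Api-Token {api_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
    )


if __name__ == "__main__":
    # Placeholders: replace with your environment URL and a token with the logs.ingest scope.
    request = build_log_ingest_request(
        "https://your-dynatrace-url.com",
        "YOUR_API_TOKEN",
        [{
            "content": "Checkout latency above 2 s",
            "severity": "WARN",
            "log.source": "checkout-service",
        }],
    )
    print(request.get_method(), request.full_url)
    # To actually send it:
    # with urllib.request.urlopen(request) as response:
    #     print(response.status)
```

Keeping request construction separate from sending makes it easy to inspect exactly what would be posted before pointing it at a real environment.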

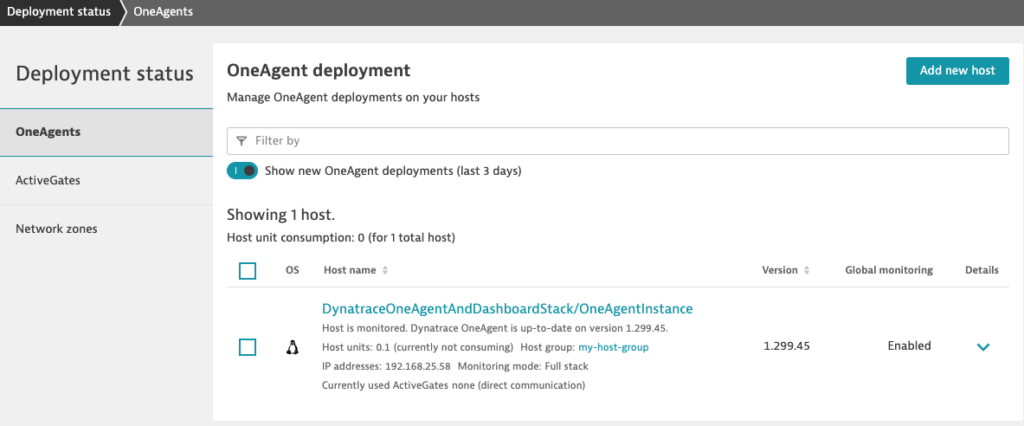

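To see this in action, let’s use the AWS CDK to deploy Dynatrace OneAgent on an AWS EC2 instance and collect host metrics. The stack assumes an existing VPC, subnet, security group, and IAM role, plus a Secrets Manager secret named dynatrace-secret that stores the Dynatrace token as JSON ({"token":"..."}):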

```typescript
import * as cdk from 'aws-cdk-lib';
import { StackProps } from 'aws-cdk-lib';
import {
  Vpc,
  Instance,
  InstanceType,
  AmazonLinuxImage,
  AmazonLinuxGeneration,
  SecurityGroup,
  Subnet,
} from 'aws-cdk-lib/aws-ec2';
import { Role } from 'aws-cdk-lib/aws-iam';
import { Construct } from 'constructs';

export class DynatraceOneAgentAndDashboardStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: StackProps) {
    super(scope, id, props);

    // Region where the Dynatrace secret lives; replace with your region.
    // Vpc.fromLookup also requires the stack to be instantiated with an explicit
    // env: { account, region } in your CDK app.
    const region = 'us-west-2';

    // Import the existing VPC
    const vpc = Vpc.fromLookup(this, 'vpc', {
      vpcId: 'vpc-xxxxxxxxxxxxxxxxx', // Replace with your VPC ID
    });

    // Look up the existing security group by ID
    const securityGroup = SecurityGroup.fromSecurityGroupId(
      this,
      'MySG',
      'sg-xxxxxxxxxxxxxxxxx', // Replace with your security group ID
    );

    // Use an existing IAM role for the EC2 instance. Role.fromRoleArn expects a
    // role ARN (":role/..."), not an instance-profile ARN. The role must be
    // assumable by EC2 and allow secretsmanager:GetSecretValue on the secret.
    const role = Role.fromRoleArn(
      this,
      'InstanceRole',
      'arn:aws:iam::xxxxxxxxxxxx:role/YourRoleName', // Replace with your IAM role ARN
    );

    // Define the EC2 instance with Amazon Linux 2
    const instance = new Instance(this, 'OneAgentInstance', {
      vpc,
      instanceType: new InstanceType('t2.micro'),
      machineImage: new AmazonLinuxImage({
        generation: AmazonLinuxGeneration.AMAZON_LINUX_2,
      }),
      securityGroup,
      role,
      vpcSubnets: {
        subnets: [
          Subnet.fromSubnetAttributes(this, 'MySubnet', {
            subnetId: 'subnet-xxxxxxxxxxxxxxxxx', // Replace with your subnet ID
            availabilityZone: 'us-west-2a',
          }),
        ],
      },
    });

    // User data script for installing Dynatrace OneAgent. CDK's Linux user data
    // already starts with #!/bin/bash, and user data runs as root.
    // ${region} below is TypeScript interpolation; each \$ reaches the shell as a plain $.
    const userDataScript = `
yum update -y
yum install -y wget awscli
mkdir -p /opt/dynatrace
cd /opt/dynatrace

# Retrieve the Dynatrace download token from Secrets Manager and extract it using sed
DT_TOKEN=\$(aws secretsmanager get-secret-value --secret-id dynatrace-secret --query SecretString --output text --region ${region} | sed 's/.*"token":"\\([^"]*\\)".*/\\1/')

# Download the latest OneAgent installer and run it
wget -O Dynatrace-OneAgent-Linux.sh "https://your-dynatrace-url.com/api/v1/deployment/installer/agent/unix/default/latest?arch=x86" --header="Authorization: Api-Token \$DT_TOKEN"
/bin/bash Dynatrace-OneAgent-Linux.sh --set-monitoring-mode=fullstack --set-app-log-content-access=true --set-host-group=my-host-group --set-host-tag=environment:prod
`;
    instance.addUserData(userDataScript);

    new cdk.CfnOutput(this, 'InstanceId', {
      value: instance.instanceId,
      description: 'The ID of the EC2 instance running Dynatrace OneAgent',
    });
  }
}
```
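The sed expression in the user data only matches a compactly serialized secret such as {"token":"..."}. If the secret were stored as {"token": "..."} (with a space), sed would pass the whole JSON through unchanged. A more tolerant approach, assuming the same secret layout, is to parse the SecretString as JSON. The helper name `extract_token` is hypothetical:

```python
import json


def extract_token(secret_string: str, key: str = "token") -> str:
    """Return the value stored under `key` in a JSON SecretString."""
    secret = json.loads(secret_string)
    if not isinstance(secret, dict):
        raise ValueError("SecretString is not a JSON object")
    if key not in secret:
        raise KeyError(f"secret has no {key!r} field")
    return secret[key]


if __name__ == "__main__":
    # Compact and pretty-printed secrets parse the same way.
    print(extract_token('{"token":"dt0c01.EXAMPLE"}'))
    print(extract_token('{ "token": "dt0c01.EXAMPLE" }'))
```

On the instance itself, the same idea could be applied by piping the aws CLI output into a small Python 3 script (if Python 3 is installed) or into `jq -r .token`.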

Once OneAgent is deployed, it automatically discovers and collects the relevant monitoring data on your host, such as CPU usage, network health, processes, and services.
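The same host data can also be read programmatically. The sketch below builds the request for one host's CPU usage over the last two hours. It assumes the Metrics API v2 query endpoint (`GET /api/v2/metrics/query`), the built-in metric key `builtin:host.cpu.usage`, and a token with the `metrics.read` scope. The URL, token, and host ID are placeholders.

```python
import urllib.parse
import urllib.request


def build_cpu_query(env_url: str, api_token: str, host_id: str,
                    timeframe: str = "now-2h", resolution: str = "15m") -> urllib.request.Request:
    """Build a Metrics API v2 request for a single host's CPU usage."""
    params = urllib.parse.urlencode({
        "metricSelector": "builtin:host.cpu.usage",
        "entitySelector": f'type("HOST"),entityId("{host_id}")',
        "from": timeframe,
        "resolution": resolution,
    })
    return urllib.request.Request(
        url=f"{env_url.rstrip('/')}/api/v2/metrics/query?{params}",
        headers={"Authorization": f"Api-Token {api_token}"},
    )


if __name__ == "__main__":
    # Placeholders: your environment URL, a token with metrics.read, and your host ID.
    request = build_cpu_query("https://your-dynatrace-url.com", "YOUR_API_TOKEN", "HOST-XXXXXXXXXXXXXXXX")
    print(request.full_url)
    # with urllib.request.urlopen(request) as response:
    #     print(response.read().decode())
```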

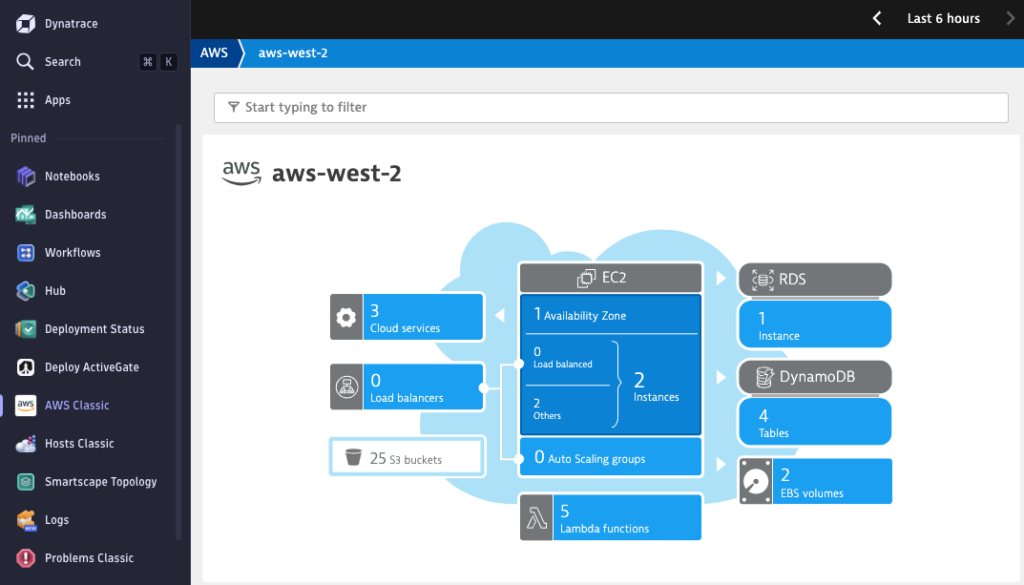

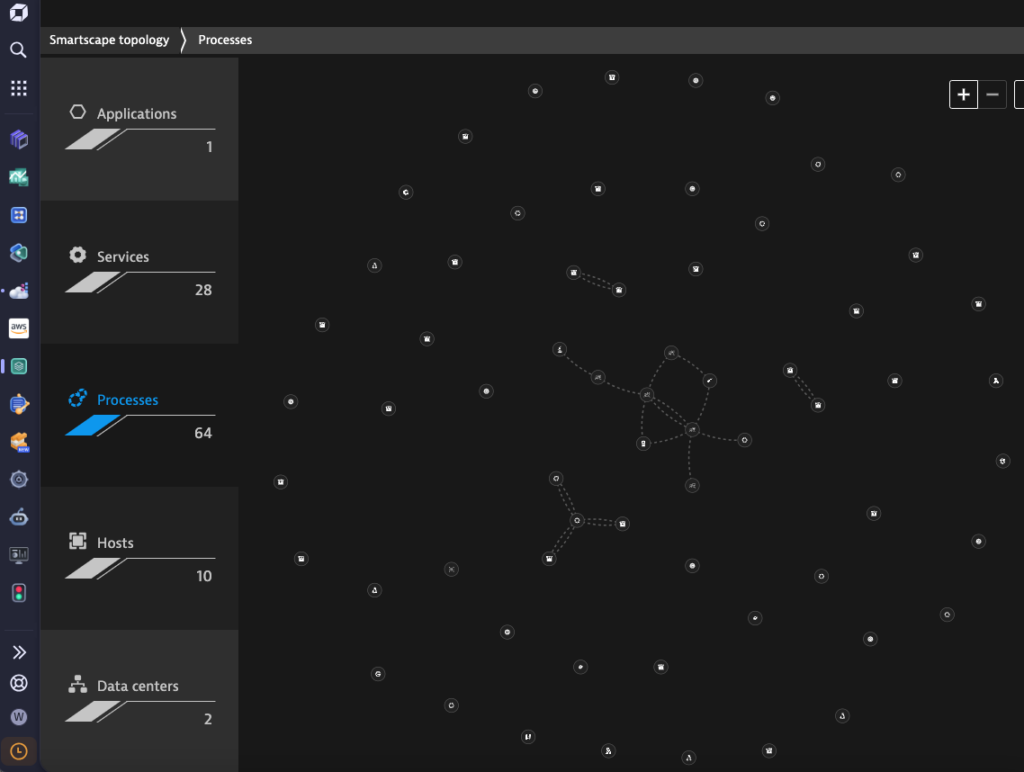

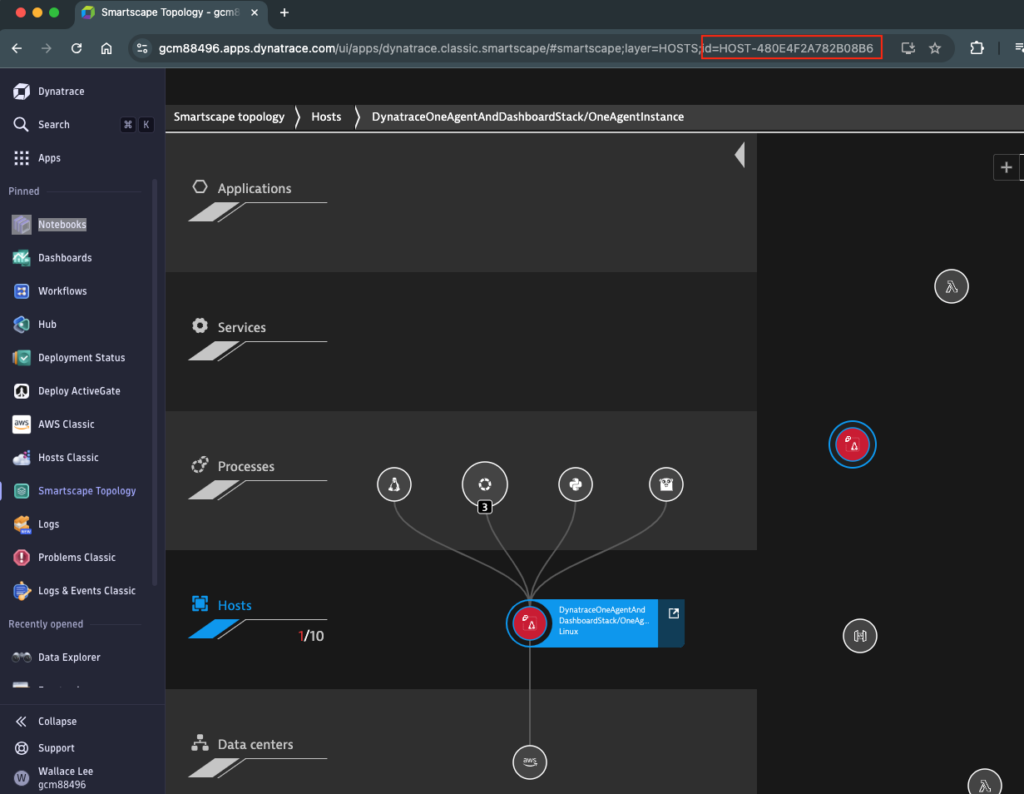

Navigate to Smartscape Topology in the Dynatrace UI to view your AWS host, its processes, and their dependencies in real time, without manual configuration.

2. AI-Powered Root Cause Analysis with Davis AI

AWS CloudWatch:

- In AWS, you can use CloudWatch alarms and basic anomaly detection to alert you when metrics deviate from thresholds. However, identifying the root cause still involves manually piecing together data from CloudWatch Logs, X-Ray, and other AWS services.
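To make the contrast concrete, here is a simplified sketch of how a static-threshold alarm such as a CloudWatch alarm evaluates a metric. It goes into ALARM when at least M of the last N datapoints breach the threshold (CloudWatch calls these "datapoints to alarm" and "evaluation periods"). Missing-data handling and anomaly-detection bands are deliberately left out. Note that the result only says that CPU is high, not why.

```python
from collections.abc import Sequence


def evaluate_threshold_alarm(datapoints: Sequence[float], threshold: float,
                             evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Return "ALARM" if at least `datapoints_to_alarm` of the last
    `evaluation_periods` datapoints are above `threshold`, else "OK"."""
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    window = datapoints[-evaluation_periods:]  # only the most recent N datapoints count
    breaching = sum(1 for value in window if value > threshold)  # GreaterThanThreshold
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


if __name__ == "__main__":
    cpu = [35.0, 41.0, 92.5, 95.1, 38.0, 96.3]  # illustrative CPU % samples
    print(evaluate_threshold_alarm(cpu, threshold=90.0, evaluation_periods=5, datapoints_to_alarm=3))  # ALARM
```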

Dynatrace Grail:

- Dynatrace’s Davis AI goes beyond basic alerting by automatically correlating data across logs, metrics, and traces to identify the root cause of an issue. This is a massive time-saver, especially in complex environments where problems often span multiple services.

To simulate a performance issue in my application, I will run a simple Python script (cpu.py) that drives CPU usage to about 90% on every core of the EC2 instance:

```python
import multiprocessing
import time


def cpu_load():
    while True:
        # Busy-wait for 0.9 seconds (90% CPU usage)
        end = time.time() + 0.9
        while time.time() < end:
            pass
        # Sleep for 0.1 seconds (10% idle)
        time.sleep(0.1)


if __name__ == "__main__":
    # Run the load on every CPU core (adjust as necessary).
    # The workers loop forever; stop the script with Ctrl+C.
    for _ in range(multiprocessing.cpu_count()):
        process = multiprocessing.Process(target=cpu_load)
        process.start()
```
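Because the workers in cpu.py never exit, it is easy to leave the instance under load after the test. A variant that stops by itself could look like the sketch below. The `busy_fraction`, `period_s`, and `duration_s` parameters and the function names are my own additions, not part of the original script.

```python
import multiprocessing
import time


def burn(duration_s: float, busy_fraction: float = 0.9, period_s: float = 1.0) -> None:
    """Keep one CPU roughly `busy_fraction` busy for `duration_s` seconds."""
    if not 0.0 < busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be in (0, 1]")
    stop_at = time.monotonic() + duration_s
    while time.monotonic() < stop_at:
        busy_until = min(time.monotonic() + busy_fraction * period_s, stop_at)
        while time.monotonic() < busy_until:
            pass  # spin: this is the CPU-consuming part
        # Idle for the rest of the period, without sleeping past the deadline.
        idle = min((1.0 - busy_fraction) * period_s, stop_at - time.monotonic())
        if idle > 0:
            time.sleep(idle)


def run_load(duration_s: float = 600.0, busy_fraction: float = 0.9) -> None:
    """Start one worker per core and wait until all of them finish."""
    workers = [
        multiprocessing.Process(target=burn, args=(duration_s, busy_fraction))
        for _ in range(multiprocessing.cpu_count())
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
```

For example, calling `run_load(duration_s=15 * 60)` from the script's `if __name__ == "__main__":` block keeps every core at roughly 90% for about 15 minutes and then exits on its own. `time.monotonic()` is used instead of `time.time()` so that system clock adjustments cannot stretch or shorten the run.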

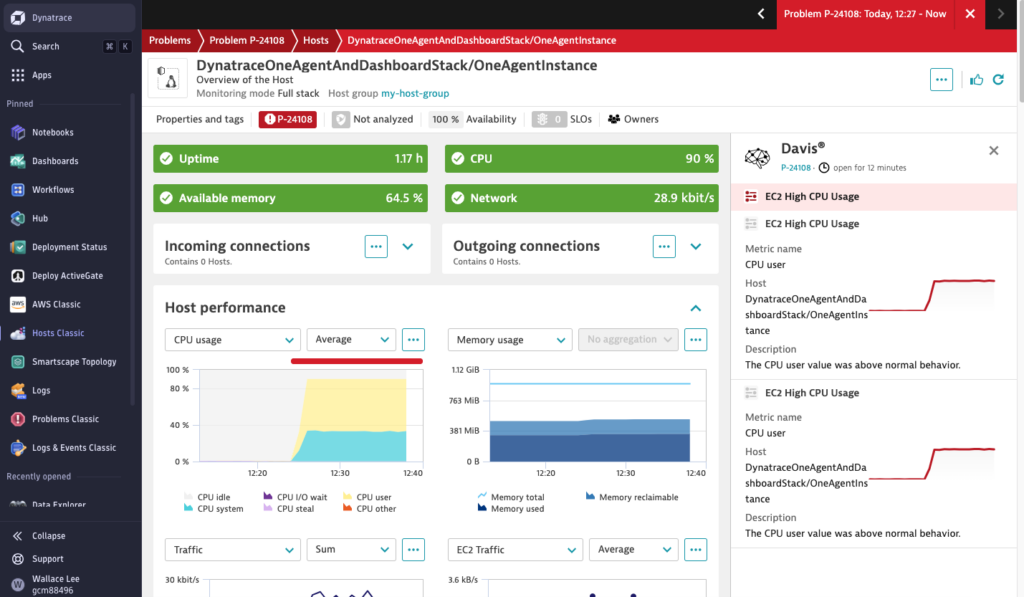

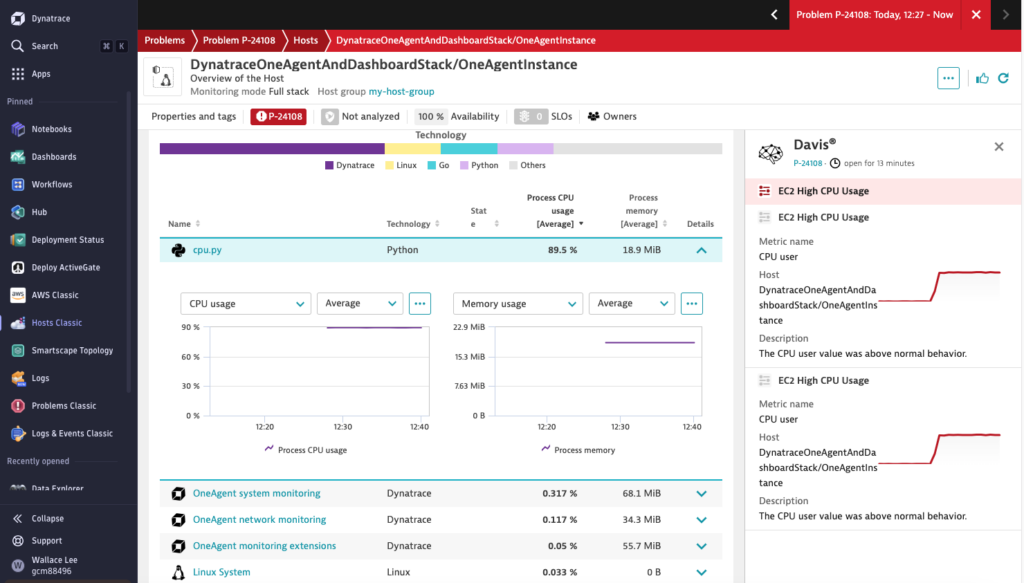

Open Hosts Classic in Dynatrace, and after a while Davis AI automatically detects the anomaly. It provides a root cause analysis that shows the metric and host that contributed to the issue.

From the same page, we can easily see that the Python script cpu.py is causing the high CPU usage. Davis AI automates what would otherwise take hours of manual log parsing and metric correlation, drastically reducing your MTTR (Mean Time to Resolution).

3. Dynatrace Query Language (DQL): Unlocking the Power of Unified Data

AWS CloudWatch:

When using AWS, you have CloudWatch Logs Insights for querying logs, CloudWatch Metrics Insights for querying metrics, and X-Ray for traces. Each of these has its own interface and query syntax, which makes cross-data queries difficult.

Dynatrace Grail:

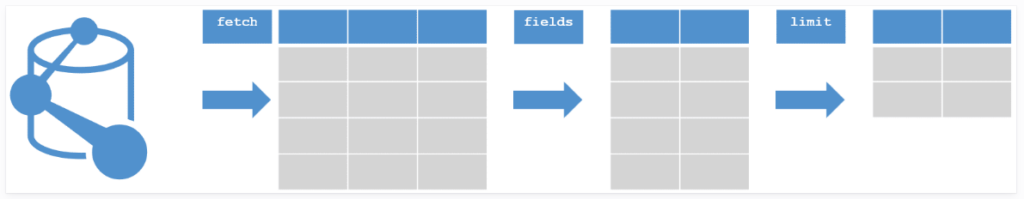

Dynatrace introduces DQL (Dynatrace Query Language), a single language to explore, query, and process all data persisted in Dynatrace Grail. DQL is a pipeline-based data processing language: you chain commands with the pipe character (|), and the data is processed step by step. Each command returns a set of records, and that output becomes the input of the next command. You keep adding commands until you are satisfied with the analysis results.
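The pipe semantics can be illustrated outside Dynatrace. The Python sketch below is only an analogy, not how Grail executes DQL: each "command" is a function that takes a list of records and returns a new list, and the pipeline feeds each output into the next command. The command names mirror DQL's filter, sort, and limit, but the helper functions themselves are hypothetical.

```python
from functools import reduce
from typing import Any, Callable

Record = dict[str, Any]
Command = Callable[[list[Record]], list[Record]]


def filter_cmd(predicate: Callable[[Record], bool]) -> Command:
    return lambda records: [r for r in records if predicate(r)]


def sort_cmd(field: str, descending: bool = False) -> Command:
    return lambda records: sorted(records, key=lambda r: r[field], reverse=descending)


def limit_cmd(n: int) -> Command:
    return lambda records: records[:n]


def run_pipeline(records: list[Record], *commands: Command) -> list[Record]:
    # Like "cmd1 | cmd2 | cmd3": the output of each command is the input of the next.
    return reduce(lambda acc, command: command(acc), commands, records)


if __name__ == "__main__":
    logs = [
        {"timestamp": 3, "loglevel": "ERROR", "content": "timeout"},
        {"timestamp": 1, "loglevel": "INFO", "content": "started"},
        {"timestamp": 2, "loglevel": "ERROR", "content": "retry failed"},
    ]
    # Roughly: fetch logs | filter loglevel == "ERROR" | sort timestamp desc | limit 1
    print(run_pipeline(logs,
                       filter_cmd(lambda r: r["loglevel"] == "ERROR"),
                       sort_cmd("timestamp", descending=True),
                       limit_cmd(1)))
```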

Let’s write a simple DQL query that counts the problem events over time for the EC2 host we deployed earlier. First, I open Smartscape Topology to find the host ID in the URL:

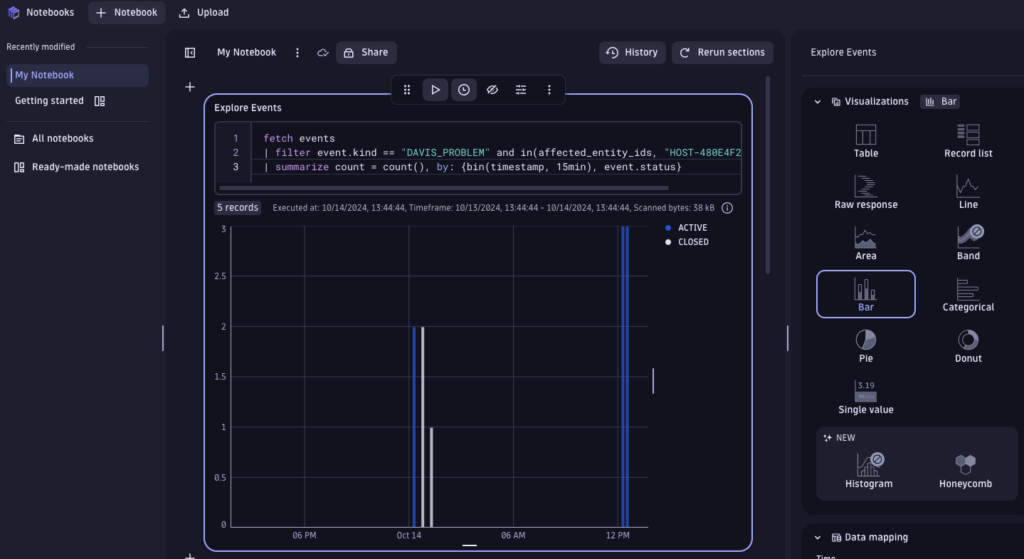

Then I open a Notebook and add a DQL query. The query below counts the Davis problem events on my EC2 host (ID HOST-480E4F2A782B08B6) in 15-minute buckets, grouped by event status. Using the visualization options, I can display the results as a bar chart.

```
fetch events
| filter event.kind == "DAVIS_PROBLEM" and in(affected_entity_ids, "HOST-480E4F2A782B08B6")
| summarize count = count(), by: {bin(timestamp, 15min), event.status}
```
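To see what the summarize step produces, here is a rough local equivalent in Python. Each timestamp is floored to the start of its 15-minute bucket, and a count is kept per (bucket, event.status) pair. The sample events are made up for illustration, and Grail's own bucket alignment may differ from the epoch-aligned flooring used here.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def bin_timestamp(ts: datetime, width: timedelta) -> datetime:
    """Floor a timezone-aware timestamp to the start of its bucket (aligned to the Unix epoch)."""
    epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
    return ts - (ts - epoch) % width


def summarize_counts(events: list[dict], width: timedelta = timedelta(minutes=15)) -> Counter:
    """Count events per (time bucket, event.status), similar to summarize count(), by: {...}."""
    return Counter((bin_timestamp(e["timestamp"], width), e["event.status"]) for e in events)


if __name__ == "__main__":
    start = datetime(2024, 10, 1, 10, 0, tzinfo=timezone.utc)
    sample = [
        {"timestamp": start + timedelta(minutes=2), "event.status": "ACTIVE"},
        {"timestamp": start + timedelta(minutes=14), "event.status": "ACTIVE"},
        {"timestamp": start + timedelta(minutes=16), "event.status": "CLOSED"},
    ]
    for (bucket, status), count in sorted(summarize_counts(sample).items()):
        print(bucket.isoformat(), status, count)
```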

4. Scalable Log Analytics Without Indexes or Schemas

AWS OpenSearch:

In a log management system like AWS OpenSearch, you need to invest setup effort before you can query and analyze data efficiently. OpenSearch is a fork of Elasticsearch and uses indexes and schema definitions (mappings) to store and organize data. While OpenSearch is a powerful, fully managed service, users often have to configure index mappings and schemas manually to optimize search performance and ensure logs, metrics, and traces are stored in the correct format.

- Indexing: OpenSearch requires building indexes to structure and store data. These indexes are created based on the schema you define, which must be updated as new data sources or formats are introduced.

- Schema Definition: In OpenSearch, defining the correct schema upfront is important to ensure that fields are correctly indexed for efficient querying. If the schema isn’t set properly, queries can become slow or return incomplete results.

- Limitations: While AWS OpenSearch is fully managed, managing indexes and schemas still requires significant planning and ongoing maintenance. This can be cumbersome in dynamic, cloud-native environments where services scale frequently and new data types constantly emerge.
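For comparison, this is roughly what defining a schema upfront looks like in OpenSearch. An index is created with an explicit mapping (the body of a `PUT /<index>` request) that fixes each field's type before any document arrives. The index name and fields below are hypothetical, chosen to match the chatbot logs used later in this post. With `"dynamic": "strict"`, documents containing unmapped fields are rejected until the mapping is updated. By default OpenSearch would instead guess types for new fields (dynamic mapping), which is convenient but can produce mappings you later have to fix.

```python
import json


def chatbot_index_body() -> dict:
    """Explicit mapping for a hypothetical chatbot-logs index (schema-on-write)."""
    return {
        "mappings": {
            "dynamic": "strict",  # reject documents that contain unmapped fields
            "properties": {
                "timestamp": {"type": "date"},
                "log_group": {"type": "keyword"},  # exact-match field, not analyzed
                "question": {"type": "text"},      # analyzed for full-text search
                "answer": {"type": "text"},
            },
        },
    }


if __name__ == "__main__":
    # Send this as the body of PUT /chatbot-logs (for example with an OpenSearch client).
    # A new field such as "latency_ms" would first need a mapping update.
    print(json.dumps(chatbot_index_body(), indent=2))
```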

Dynatrace Grail:

Dynatrace Grail eliminates the need to build indexes or define schemas manually. It offers schema-on-read functionality, allowing you to ingest logs, metrics, and traces and immediately query the data without the overhead of defining how that data should be stored. This makes Grail a more scalable and flexible solution, especially in environments where data structures frequently change.
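Schema-on-read means that structure is applied at query time rather than at ingest time. The sketch below is a conceptual illustration in Python, not Grail's storage engine. Raw log lines are stored untouched, and each query parses them and picks out whatever fields it needs, so a field added by a newer service version (here a hypothetical `latency_ms`) is queryable immediately, while older records simply yield None.

```python
import json
from typing import Any


class RawLogStore:
    """Append-only store of raw log lines; no schema is declared at ingest time."""

    def __init__(self) -> None:
        self._lines: list[str] = []

    def ingest(self, line: str) -> None:
        self._lines.append(line)  # stored as-is, no parsing or validation

    def query(self, *fields: str) -> list[dict[str, Any]]:
        """Parse each line at read time and project the requested fields."""
        rows = []
        for line in self._lines:
            try:
                record = json.loads(line)
            except json.JSONDecodeError:
                record = None
            if not isinstance(record, dict):
                record = {"content": line}  # keep non-JSON lines as plain content
            rows.append({name: record.get(name) for name in fields})
        return rows


if __name__ == "__main__":
    store = RawLogStore()
    store.ingest('{"service": "chatbot", "status": 200}')
    store.ingest('{"service": "chatbot", "status": 200, "latency_ms": 184}')  # new field appears
    store.ingest("plain text line")
    print(store.query("service", "latency_ms"))
```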

- No Indexing or Schema Definition Required:

    - Unlike OpenSearch, where users must predefine indexes and schemas, Grail dynamically understands and organizes your observability data (logs, metrics, and traces) as it ingests it. This makes it easier to handle new data types and start querying immediately without worrying about the underlying data structure.

- Real-Time Data Ingestion:

    - With Grail, data is available for querying as soon as it is ingested. You don’t need to wait for indexing jobs to finish, which can be a bottleneck in OpenSearch when dealing with high data volumes or complex queries.

    - Whether you’re ingesting logs from microservices or metrics from infrastructure, Grail lets you query this data in real time, enabling faster troubleshooting and performance analysis.

- Unified Data Ingestion:

    - Grail unifies logs, metrics, and traces in one data lakehouse, allowing cross-data querying without separate indexes for each data type. OpenSearch, on the other hand, requires separate index setups for each data type, with separate mappings and storage requirements for logs, metrics, and traces.

- Seamless Scalability:

    - Grail is built to scale automatically with your environment. As your data grows, the platform adapts, providing fast query performance without manual reconfiguration.

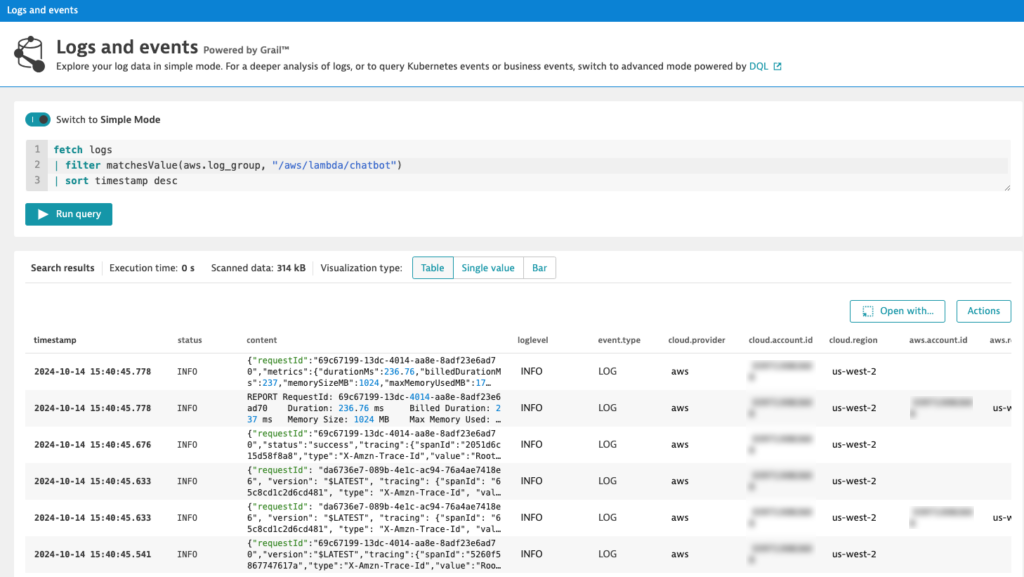

Let’s use DQL to demonstrate how to query logs in JSON format and extract specific attributes, such as the user’s question and the chatbot’s answer, without the need to build indexes or define schemas. In this example, I’ve already ingested the logs from my chatbot Lambda function into Dynatrace. These logs capture the interaction between users and the chatbot, specifically showing what users ask and how the chatbot responds.

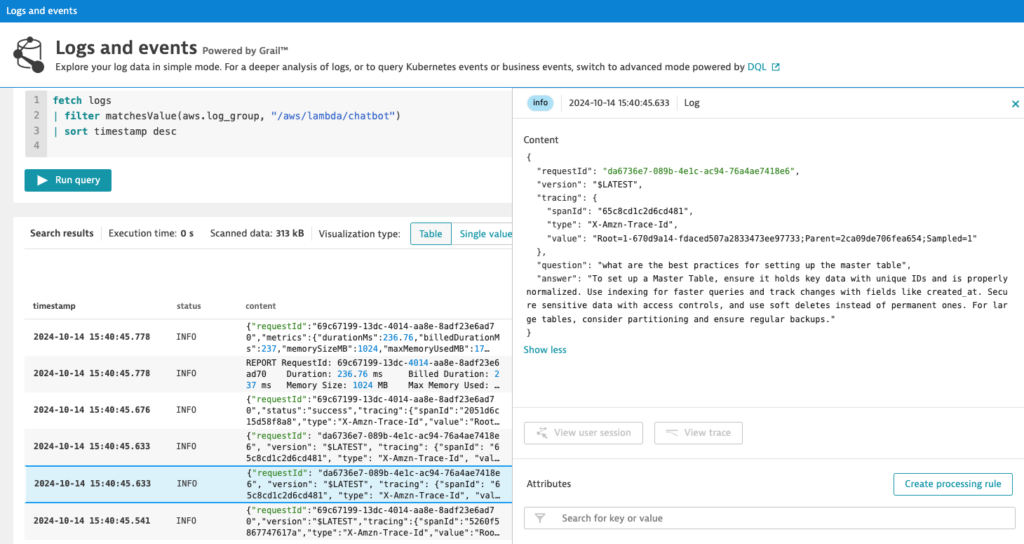

```
fetch logs
| filter matchesValue(aws.log_group, "/aws/lambda/chatbot")
| sort timestamp desc
```

Since the content is JSON, I can parse it with the pattern "json:chat", which stores the parsed object in a new field named chat, and then extract only the attributes I am interested in, question and answer, as chat[question] and chat[answer]. The parse command reads the structure of each log record at query time, so you don’t have to define a schema upfront.

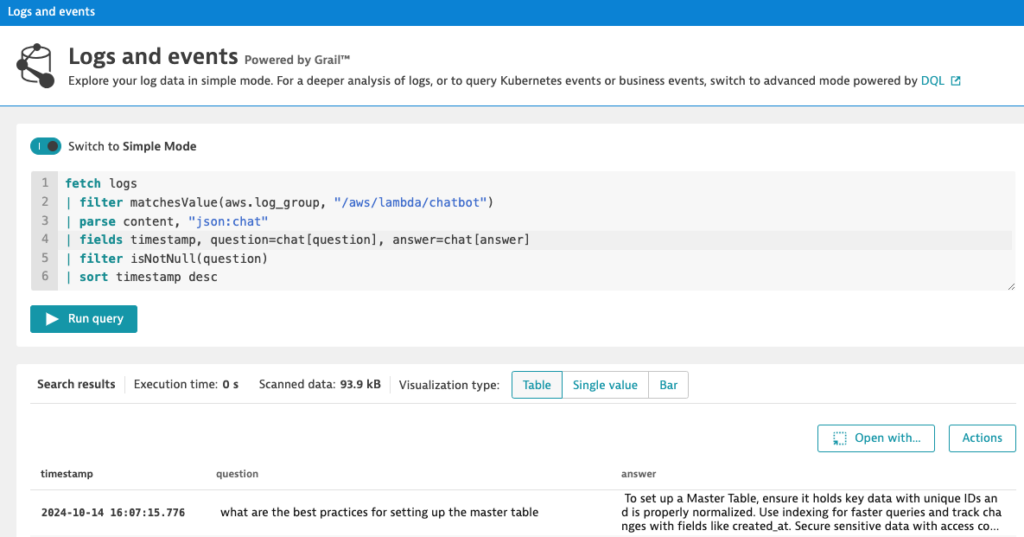

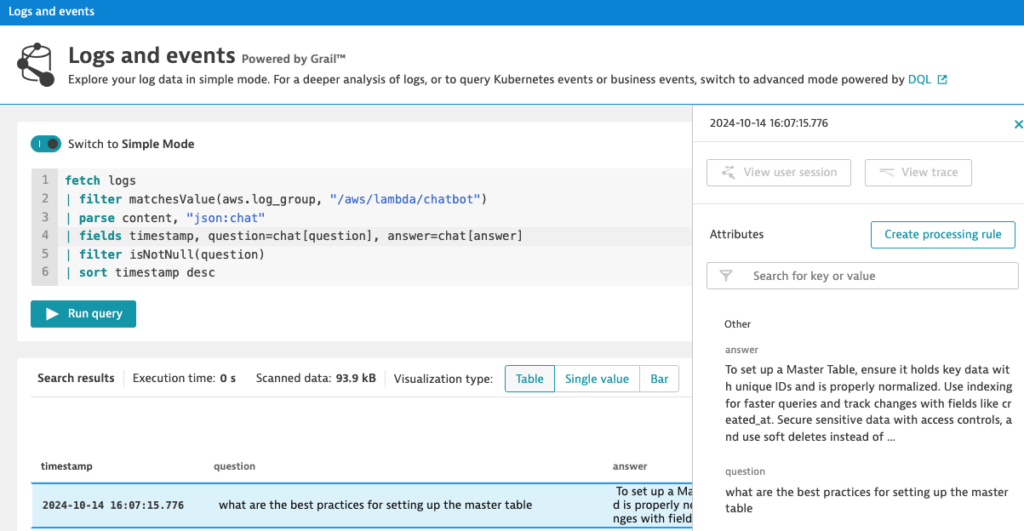

```
fetch logs
| filter matchesValue(aws.log_group, "/aws/lambda/chatbot")
| parse content, "json:chat"
| fields timestamp, question=chat[question], answer=chat[answer]
| filter isNotNull(question)
| sort timestamp desc
```
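Why is the `isNotNull(question)` filter there? A Lambda log group also contains platform lines such as START, END, and REPORT, which are not JSON chat records, so they produce no question. The Python sketch below mimics the query on a few made-up records to show the effect. It illustrates the query's logic only, not how Grail evaluates it, and it approximates `matchesValue` with exact equality.

```python
import json
from typing import Any, Optional


def parse_json(content: str) -> Optional[dict[str, Any]]:
    """Return the parsed object, or None when the line is not a JSON object."""
    try:
        value = json.loads(content)
    except json.JSONDecodeError:
        return None
    return value if isinstance(value, dict) else None


def chat_history(logs: list[dict[str, Any]], log_group: str = "/aws/lambda/chatbot") -> list[dict[str, Any]]:
    rows = []
    for log in logs:
        if log.get("aws.log_group") != log_group:  # filter matchesValue(aws.log_group, ...)
            continue
        chat = parse_json(log["content"]) or {}  # parse content, "json:chat"
        row = {
            "timestamp": log["timestamp"],  # fields timestamp, question=..., answer=...
            "question": chat.get("question"),
            "answer": chat.get("answer"),
        }
        if row["question"] is not None:  # filter isNotNull(question)
            rows.append(row)
    return sorted(rows, key=lambda r: r["timestamp"], reverse=True)  # sort timestamp desc
```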

Final Thoughts

Dynatrace Grail offers a powerful solution for modern cloud observability, enabling organizations to unlock deep insights from large volumes of unstructured log data. Its ability to ingest, store, and query logs in real time without predefined indexes or schemas, combined with AI-powered analytics, streamlines troubleshooting and enhances decision-making. By integrating with existing cloud environments and providing actionable intelligence across applications, infrastructure, and user experiences, Grail helps teams reduce downtime, optimize performance, and gain full visibility into their systems. Its flexibility and performance make it a key tool for driving efficiency and innovation in dynamic, cloud-native environments.

Thank you for reading my blog, and I hope you liked it!